Title

Introduction to Interpretable AI

Lecturer

Mara Graziani, mara.graziani@hevs.ch

Henning Müller, henning.mueller@hevs.ch

Organizer/s

Institute of Information Systems, University of Applied Sciences of Western Switzerland

Course Duration

16 hours

Course Type

Short Course

Participation terms

The course is organized for self-study. If you need supervision on the assignments, please contact the lecturers and we will do our best to provide it.

Lecture Plan

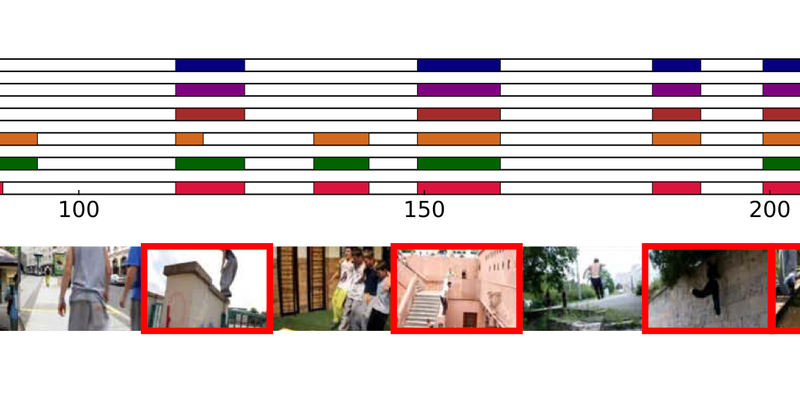

1. The “where and why” of interpretability. Reading of taxonomy papers and perspectives.
2. The “three dimensions” of interpretability. Activation Maximization, LIME surrogates, Class Activation Maps and Concept Attribution methods examined in detail, with hands-on exercises (Mara Graziani).
3. From attention models and eye tracking to explainability. Invited talk by Prof. Jenny Benoit Pineau.
4. Concept-based interpretability.
5. LIME for Medical Imaging data, presentation by Iam Palatnik (Ph.D.).
6. Causal analysis for Interpretability, presentation by Sumedha Singla (Ph.D. student).
7. Pitfalls of Saliency Map Interpretation in Deep Neural Networks, presentation by Suraj Srinivas (Ph.D.).
8. Evaluation of interpretability methods. Reading of papers and hands-on exercises.

Language

English

Modality (online/in person)

Online
