Learn how to evaluate your Deep Neural Network explanations!

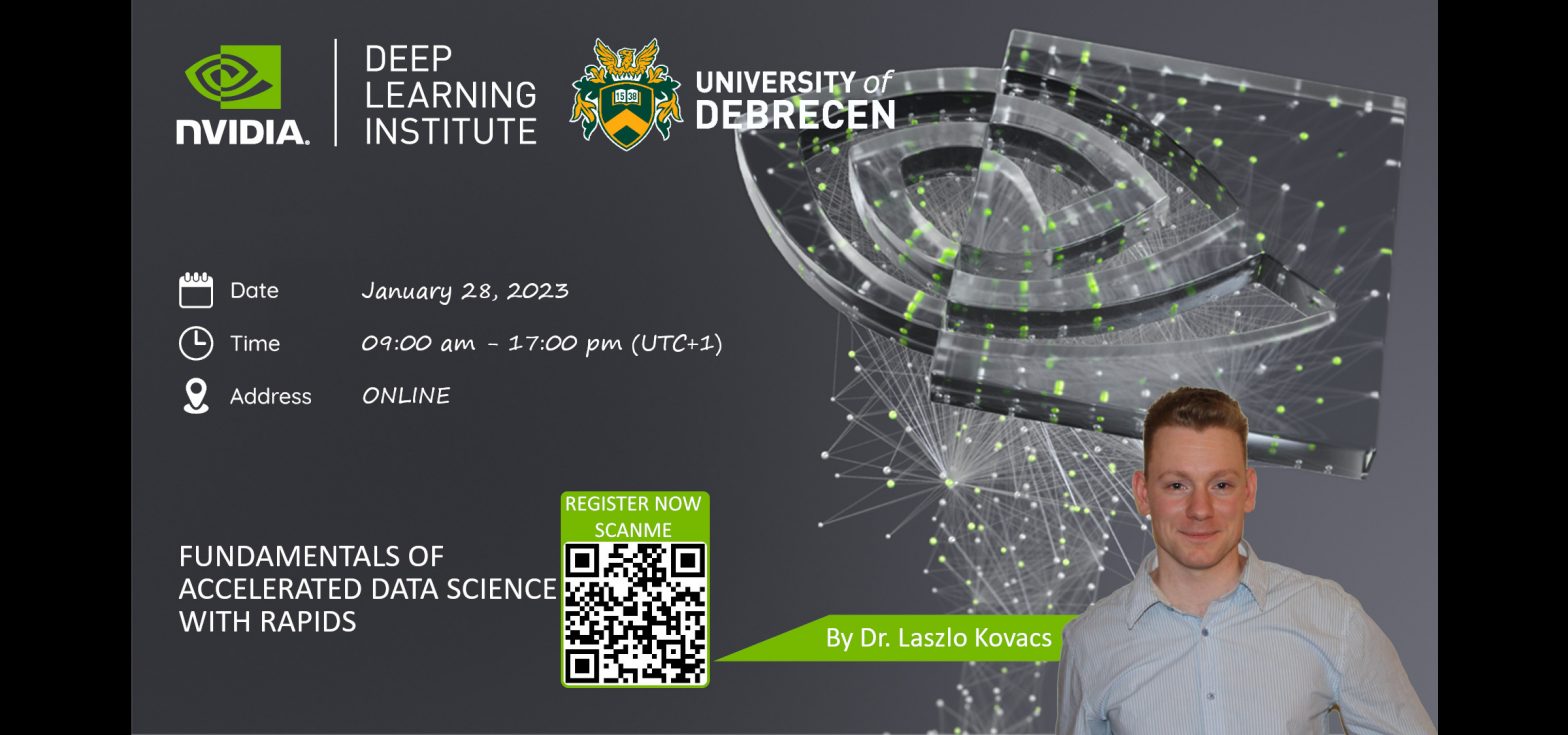

Lecturers

Marco Zullich, m.zullich@tudelft.nl

Emily Schiller, emily.schiller@xitaso.com

Content and organization

This course is tailored for Ph.D. candidates who are using, or are interested in using, Explainable AI as part of their research. MSc students with a background in mathematics and statistics can also attend.

In the last decade, the GDPR and the EU AI Act have formalized the concept of transparency in the context of AI models. Transparency, in the narrower sense of interpretability of a model's predictive logic, can be achieved through white box models, i.e., models whose low complexity makes them human-interpretable. However, these models often lack the predictive power of black box models such as Neural Networks. Although black box models are far less interpretable, an approximate understanding of their predictive dynamics can be achieved with the tools provided by Explainable AI (XAI).

However, a crucial limitation of these tools is the difficulty of evaluating the quality of their outputs in a formal, functional way. This difficulty generally undermines trust in them and severely hinders their use in safety-critical applications. This course provides an introductory overview of XAI, with specific attention to Neural Network explainability, and then focuses on the various aspects that define quality in the context of XAI.

The course will feature six 2-hour frontal lectures. All lectures will be held from 15:00 to 17:00 CET.

- Jan 12: Refresh on XAI

- Jan 14: Model-agnostic feature importance

- Jan 16: Neural Network-specific feature importance

- Jan 19: Counterfactual examples and their evaluation – introduction to the Co-12 evaluation framework

- Jan 21: Formal evaluation of properties (e.g., faithfulness, robustness, coherence…)

- Jan 23: Uncertainty Quantification and XAI

Students who need a certificate of completion must pass an assessment, choosing between:

- A multiple-choice exam on the topics of the course. (exp. time 1 hr)

- An essay containing a reflection on how XAI is/can be used and evaluated in their research. (exp. time 3 hrs)

Note that the certificate is issued only to students who attend at least four of the six frontal lectures.

There is a third part of the course (which brings the total number of hours to 18), which can only be attended in person in Delft by attendees who choose to write an essay. This final part features a group discussion for mutual reflection on each other's essays. The group discussion will be held in February, on a date to be determined.

Level

Postgraduate

Course Duration

12, 15, or 18 hours (depending on the assessment mode chosen)

Course Type

Short Course

ECTS

1.5 according to TU Delft PhD EC scheme

Participation terms

If you are already an AIDA Student*, please (a) register on the course site AND (b) enroll in the same course in the AIDA system, so that this course is included on your AIDA Course Attendance Certificate. If you are not an AIDA Student, follow only step (a). *AIDA Students should already be registered in the AIDA system (they are PhD students or PostDocs who belong only to the AIDA Members list).

Schedule

Monday - Wednesday - Friday, January 12 to 23, from 15:00 to 17:00 CET

Language

English

Modality (online/in person):

Online; in-person may be possible (with priority to TU Delft students)

Notes

Registration form: https://forms.office.com/e/eLYUpwYCS3
